In November 2014, the CMS Experiment at the Large Hadron Collider (LHC) released the first batch of research-grade data from the 2010 proton-proton collision run. This was an unprecedented move in the field of particle physics, since up until this point, access to data from hadron colliders was restricted to members of the experimental collaborations.

When I heard about the CMS Open Data project, I immediately downloaded the CERN Virtual Machine to see what kind of data had been made available. As a theoretical particle physicist, I can slam together particles and study their debris… on my chalkboard, or through pen-and-paper calculations, or using software simulations. For the first time, I had access to real collision data from a cutting-edge experiment, as well as an opportunity to demonstrate the scientific value of public data access.

It was not easy. In fact, it was one of the most challenging research projects in my career. But roughly three years later, my research group proudly published two journal articles using CMS open data in 2017, one in Physical Review Letters and one in Physical Review D. And from our experience, I can say confidently that the future of particle physics is open.

Putting Theory into Practice

In particle physics, there has long been a division between theorists like myself and experimentalists who work directly with collision data. There are good reasons for this divide, since the expertise needed to perform theoretical calculations is rather different from the expertise needed to build and operate particle detectors. That said, there is substantial overlap between theory and experiment in the area of data analysis, which requires an understanding of statistics, and data interpretation, which requires an understanding of the underlying physical principles at play.

One of the main reasons for restricting data access is that collider data are extremely complicated to interpret properly. As an example, the centre-of-mass collision energy of the LHC in 2010 was 7 TeV, and by conservation of energy, one should never find more than 7 TeV of total energy in the debris of a single collision event. In the CMS Open Data, however, we found an event with over 10 TeV of total energy. Was this dramatic evidence for a subtle violation of the laws of nature? Or just a detector glitch? Not surprisingly, this event did not pass the recommended data quality cuts from CMS, which demonstrates the importance of having a detailed knowledge of particle detectors before claiming evidence for new physics.

Because of these complications, progress in particle physics typically proceeds via a vigorous dialogue between the theoretical and experimental communities. An experimental advance can inspire a new theoretical method, which launches a new experimental measurement, which motivates a new theoretical calculation, and so on. While there are some theoretical physicists who have officially joined an experimental team, either in a short-term advisory role or as a long-term collaboration member, that is relatively rare. Thus, the best way for me to influence how LHC data are analysed is to write and publish a paper, and I’m proud that a number of my theoretical ideas have found applications at the LHC.

With the release of the CMS Open Data, though, I was presented with the opportunity to perform exploratory physics studies directly on data. My friend (and CMS Open Data consultant) Sal Rappoccio always reminds us of the apocryphal saying: “data makes you smarter”. This aphorism applies both to detector effects, where “smarter” means processing the data with improved precision and robustness, and to physics effects, where “smarter” means extracting new kinds of information from the collision debris. So while I didn’t know exactly what I wanted to do with the CMS Open Data when I first downloaded the CERN Virtual Machine, I knew that, no matter what, I was going to learn something.

Gathering a Team

The first thing I learned was somewhat demoralising, since, within the first few weeks, I realised that I did not have the coding proficiency nor the stamina to wrestle with the CMS Open Data by myself. While I regularly use particle physics simulation tools like Pythia and Delphes, the CMS software framework required a much higher level of sophistication and care than I was used to.

Luckily, an MIT postdoctoral fellow Wei Xue (now at CERN) had extensive experience using public data from the Fermi Large Area Telescope, and he started processing the 2 Terabytes of data in the Jet Primary Dataset (more about that later). Around the same time, an ambitious MIT second-year student Aashish Tripathee (now a graduate student at University of Michigan) joined the project with no prior experience in particle physics but ample enthusiasm and a solid background in programming.

So what were we actually going to do with the data? My first idea was to try out a somewhat obscure LHC analysis technique my collaborators and I had developed in 2013, since it had never been tested directly on LHC data. (It may eventually be incorporated into a hardware upgrade of the ALTAS detector, or it may remain in obscurity.) Wei was even able to make a plot (slide 29) for me to show in March 2015 as part of a long-range planning study for the next collider after the LHC. There is a big difference, though, between making a plot and really understanding the physics at play, and despite performing a precision calculation of this technique, it was not clear whether we could do a robust analysis.

In early 2015, though, I had the pleasure of collaborating with two MIT postdoctoral fellows, Andrew Larkoski (now at Reed College) and Simone Marzani (now at University of Genova), to develop a novel method to analyse jets at the LHC. While new, this method had a timeless quality to it, exhibiting remarkable theoretical robustness that we hoped would carry over into the experimental regime.

The Substructure of Jets

Jets are collimated sprays of particles that arise whenever quarks and gluon are produced in high-energy collisions of hadrons. Almost every collision at the LHC involves jets in some way, either as part of the signal of interest or as an important component of the background noise. In the 2010 CMS Open Data, the Jet Primary Dataset contains collision events exhibiting a wide range of different jet configurations, from the most ubiquitous case with back-to-back jet pairs, to the more exotic case with just a single jet (which might be a signal of dark matter), to the explosive case with a high multiplicity of energetic jets (which might arise from black-hole production).

While the formation of jets in high-energy collisions has been known since 1975 (and arguably even earlier than that), there has been remarkable progress in the past decade in understanding the substructure of jets. A typical jet is composed of around 10-30 individual particles, and the pattern of those particles encodes subtle information about whether the jet comes from a quark, or from a gluon, or from a more exotic object. Jet substructure continues to be an active area of development in collider physics, with many new advances made every year.

A fascinating feature of jets is that they exhibit fractal-like behaviour: as one zooms in on a jet and examines its substructure, one finds that the substructure itself has sub-substructure, which has sub-sub-substructure, and so on. This recursive self-similar behaviour is captured by the “QCD splitting functions”, which describes how a quark or gluon fragments into more quarks and gluons. (QCD refers to quantum chromodynamics, which is the theory that describes the interactions of quarks and gluons.) While the QCD splitting functions are well known and have been indirectly tested through a multitude of collider measurements, they had never before been tested directly.

In my 2015 research with Andrew and Simone, we found a way to unravel the recursive structure of a jet to expose the QCD splitting function. This method built upon a range of jet innovations over the years, including a jet (de)clustering method from the late 1990s, a jet grooming technique from 2008 that arguably launched the field of substructure, a refinement and recapitulation from 2013, and a powerful generalisation from 2014. The final method, while sophisticated in its implementation, is simple in its interpretation, since after decomposing a jet into it core substructure components, one can “see” the QCD splitting function… at least in theory.

Confronting the CMS Open Data

Could we expose the QCD splitting function using experimental data from the LHC? Wei and Aashish set out to parse the CMS Open Data while Andrew and Simone performed the corresponding theoretical calculations. Remarkably, just a few months later in August 2015, I was able to present very preliminary results from our analysis at the BOOST 2015 workshop in Chicago. Little did we know that it would take us another two years to actually get our analysis into a publishable form.

The CMS Open Data are released in the same format used by the majority of official CMS analyses. So, not surprisingly, we had to perform many of the same analysis steps used internally by CMS. Some of these steps were familiar to me from my theoretical investigations into jets, such as identifying jets using clustering algorithms, applying selection criteria to isolate the jets of interest, digging into an individual jet using a growing toolbox of substructure techniques, and making histograms of the results.

Most of our time, though, was spent trying to understand the myriad challenges faced in any experimental investigation into jets. While I had heard of most of these challenges by name, I had no first-hand experience dealing with them in practice. For example, since detector data can be noisy, we had to impose “jet quality criteria” to make sure we weren’t looking at phantom jets. Since detector data can be imperfect, we had to apply “jet energy correction factors” to account for missing information within the jets. We had to learn how to manage 2 TB of data, which is small enough to fit on a typical hard drive, but large enough that processing the complete dataset took two weeks on a single computer. (In retrospect, we probably should have leveraged MIT’s high-performance computing resources, but we decided to test the claim that “anyone with a laptop” should be able to analyse the CMS Open Data.)

By far the biggest challenge for us (and for most CMS jet analyses) was “triggering”. I mentioned above that the Jet Primary Dataset contains many different kinds of jet configurations, but I didn’t explain how exactly those configurations were chosen. The collision rate at the LHC is so high that there aren’t enough computing resources available to process all of the data that is collected, never mind the challenges of transmitting such large data from the detector to storage. Instead, CMS has a complex system of triggers that reject “uninteresting” events and select “interesting” events. The reason for the scare quotes is that triggers are indeed scary. There is a rather large menu of different possible event configurations that involve jets and other collider objects. If CMS made a mistake in deciding which events were “interesting”, then potentially valuable data could be lost forever. On the flip side, if CMS decided that too many events were “interesting”, then that could flood their computing systems with a deluge of useless information.

For our final analysis, we had to carefully sew together five different trigger selections, all of which changed over the course of the 2010 run. As an example, one of the triggers was named “HLT_Jet70U”. “HLT” stands for “High-Level Trigger”, which is the most sophisticated level of trigger selection. “Jet” means that there was just a single jet object used to define the trigger (even though most of the selected events contain two jets). One might think that “70” would mean that this trigger would select jets with an energy (strictly speaking, “transverse momentum”) above 70 GeV, but the “U” means “uncalibrated”, such that only when the jet energy was above 150 GeV was “HLT_Jet70U” guaranteed to work as expected. Through a long process of trial and error, we eventually figured out how to properly use the jet trigger information from CMS, which was essential for us to gain confidence in our results.

Ultimately, once we dealt with these experimental complications, we succeeded at exposing the QCD splitting function using the 2010 CMS Open Data. The results were perfectly in line with our theoretical expectations, providing a direct confirmation of the fractal structure of jets. Armed with this rich open dataset, we also performed a variety of additional substructure tests that were only possible because of the fantastic performance of the CMS detector. Coming full circle, Aashish presented our nearly-final analysis at the BOOST 2017 workshop in Buffalo: two years of effort summarised into a 20-minute talk.

Learning from the Community

While our two publications only list five authors (Aashish, Wei, Andrew, Simone and myself), our acknowledgements recognise around 40 experimentalists who generously offered their time, advice, and, in some cases, code. Without help from Sal Rappoccio, we would have struggled to figure out how to extract and apply the proper jet correction factors. Without help from the CMS Open Data team, including Kati Lassila-Perini and Achim Geiser, we would have never figured out how to determine the “integrated luminosity”, which tells you how much total data CMS had collected. Whenever I gave talks about our CMS Open Data effort, experimentalists in the audience would kindly point out some of our “rookie mistakes” (often made by starting experimental PhD students). We also benefitted from having a 2015 summer student Alexis Romero (now a graduate student at University of California, Irvine) test whether the CMS Open Data results agreed with those obtained from simulated LHC samples.

Most of the feedback we got from the experimental particle physics community was very positive. Though there was considerable initial scepticism that a team of five theoretical physicists could perform a publishable analysis based on open collider data, much of that scepticism dissipated once it became clear that our analysis was based largely on the same workflow used by CMS. Our analysis is by no means perfect, since there are places where we simply didn’t have the information (or the expertise) to address a known shortcoming. But I am proud that we applied a high degree of scrutiny to our own work, even though the final plots in our September 2017 publication are essentially the same as the ones I showed back in August 2015.

There were, however, a number of concerns raised by our work. Unlike analyses performed within CMS, our work did not have to go through the rigorous CMS internal review process. (Our papers were subject to peer review prior to publication, but that standard is not nearly as stringent as the one applied within CMS.) Unlike CMS members, we did not have to perform service work on the experiment to gain authorship status. (Some of my software tools have been incorporated into the CMS software framework, but that is a relatively small contribution to the overall CMS effort.) These issues are not specific to the CMS Open Data, though, and arise any time data are released into the public domain. Indeed, there is no guarantee that public data will be used correctly, and there is a risk that making the data public will make it less attractive for an experimentalist to join a collaboration.

In my view, though, the scientific benefits of making data public outweigh the scientific costs. With the CMS Open Data, there is a time lag of 4–5 years between when the data are collected and when it is made public. That time lag helps ensure that open data complements, rather than competes, with the needs of the CMS Collaboration. Moreover, open data are a stepping stone towards full archival access, such that even when the LHC is eventually decommissioned, the data will be preserved for future use. By making the data public, there is a chance to perform a back-to-the-future analysis like ours, where 2010 data, released in 2014, is analysed using a 2015 technique, for publication in 2017.

Interestingly, as we were pursuing our open data analysis, there was an official CMS analysis on a similar topic. Our analysis was based on proton-proton collisions from 2010, while the CMS analysis was based mostly on lead-lead collisions from 2015. Our analysis was an exploratory study of jet substructure, while the CMS analysis was far more ambitious, using jet substructure to probe the properties of a hot, dense state of matter called the quark-gluon plasma. One could cynically say that our analysis was stealing thunder from CMS, but I see these two studies as being synergistic, since we made different analysis choices that led to complementary physics insights. In this way, open data can enrich the dialogue between the theoretical and experimental particle physics communities.

Broadening the Open Data Effort

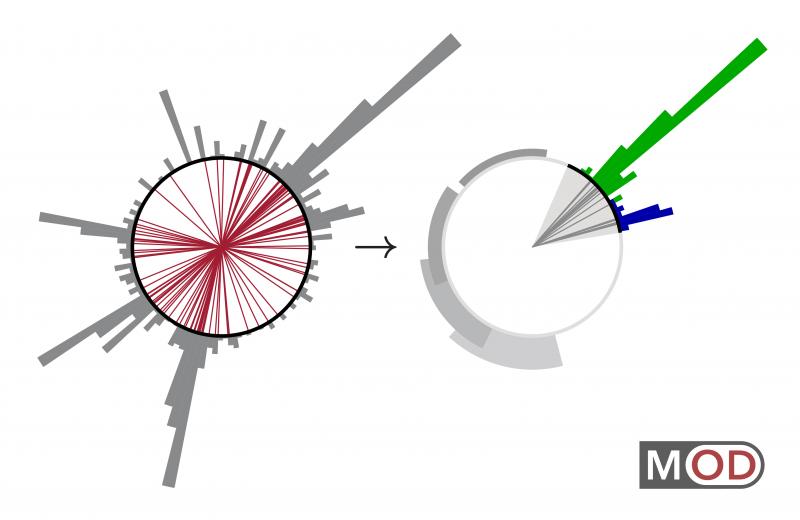

In addition to performing our own analyses, we are trying to make it easier for others to work with the CMS Open Data. For our jet substructure work, we found it beneficial to take the original CMS Open Data released in AOD format (“Analysis Object Data”) and distill it into a simpler MOD format (short for “modified”, backronymed to “MIT Open Data”). Because MOD files contain a strict subset of the AOD information, it helped us expedite the analysis workflow as well as avoid common pitfalls. Aashish developed two GitHub repositories to produce and analyse MOD files, and this code could be the basis for subsequent CMS Open Data studies. (That said, I do not recommend trying to use these tools in their present forms, since we are actively working to simplify them and make them more portable.)

In April 2016, the CMS Experiment released the second batch of open data from 2011 proton-proton collisions. This 2011 data set is far richer than the 2010 release, since it contains many more event categories as well as more information about detector performance. I have gathered a new team of theorists to work with the 2011 data, and I hope to report on that work sometime next year. Compared to our study with the 2010 open data, our upcoming analysis is simultaneously simpler (since it doesn’t directly involve jets) and more complex (since we are digging into more rarified collision properties). I don’t want to reveal the specific topic of our study, though, since short-term secrecy is sometimes needed to enable long-term openness (cf. the 4-5 year time lag for the CMS open data release).

Soon, the CMS Experiment will release the third batch of open data, this time from 2012, with hopefully enough information to reproduce the monumental discovery of the Higgs boson.

Beyond CMS Open Data, I am also looking for ways to use archival data from the ALEPH experiment. ALEPH was one of the four main experiments at the former Large Electron-Position (LEP) collider at CERN. LEP closed in 2000 such that the tunnel could be reused for the LHC. With the help of ALEPH collaboration member Marcello Maggi, we are taking ALEPH data from the 1990s and applying jet substructure techniques that weren’t even conceived of until 2008. While LEP data is very different from LHC data, I expect some of the lessons from our archival LEP studies to inform ongoing analyses at the LHC.

An Open Invitation

When I first started working with the CMS Open Data, people would often ask me why I didn’t just join CMS. After all, instead of trying to lead a small group of theorists with no experimental experience, I could have leveraged the power and insights of a few-thousand-person collaboration. This is true… if my only goal was to perform one specific jet substructure analysis.

But what about more exploratory studies where the theory hasn’t yet been invented? What about engaging undergraduate students who haven’t decided if they want to pursue theoretical or experimental work? What about examining old data for signs of new physics? What about citizen-scientists who might not have world experts on proton-proton and lead-lead collisions in the building next door? And what happens if I have a great new theoretical idea after the LHC has already shut down? These were the questions that motivated me to dig into the CMS Open Data, and I hope that they might motivate some of you to take a look as well. Our two publications are a proof of principle that open collider analyses are feasible and potentially impactful.

Ultimately, physics is an experimental science, and the aphorism that “data makes you smarter” holds at the most foundational level. It is true that theoretical insights have played a crucial role in solidifying the principles of fundamental physics. But almost everything we know for certain about the universe has originated from centuries of keen observations and detailed measurements. Without experimental data, physical principles would be mere speculations. With experimental data, we have an opportunity to expose the deepest structures of the universe… not just by scribbling on a chalkboard but by smashing together particles at ever-increasing energies.

When you decide to jump into the CMS Open Data yourself (and I hope you do), you will be confronted with this question: “I have installed the CERN Virtual Machine: now what?” However you answer this question, I am sure that you are going to learn something. And hopefully, you will teach the rest of us something, too.

The views expressed in CMS blogs are personal views of the author and do not necessarily represent official views of the CMS collaboration.